(Updated 4-Jan-24 with light revisions and info about my third and fourth submissions. Small bugfix and clarification added 25-Jan-24. Updated with follow-up article on 28-Apr-24.)

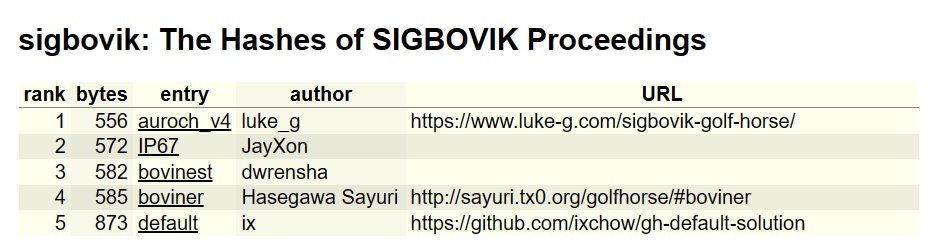

The “SIGBOVIK” problem is the shortest problem in the Golf Horse collection. The goal is to write the shortest JavaScript program that prints out thirteen 64-digit hexadecimal numbers. Despite the apparent simplicity of this challenge, there’s still a surprising amount of depth to it! In this article, I’ll go through how I approached this problem and produced the currently leading solution. A sanitized version of my source is on GitHub.

Note: the name “auroch” is to maintain the spirit of the “bovine” solutions.

The problem

The SIGBOVIK problem is to produce 13 specific SHA-256 checksums, each given as 64 hex digits. The submissions are JavaScript code encoded with UTF-8, and the goal is to minimize the size in bytes. The UTF-8 requirement is extremely important, as we must optimize around the byte cost of Unicode characters represented as UTF-8. (See my previous article about packing data in UTF-8.)

Here is the list of values we must generate:

77ff95a9033c674a35af2d95e4691f64f5a7b922a69b6b3d4cf2ec251d582a68

e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855

800148acb3b3f4434321a3535fecb94ed833ba177707587ed134be05e8b640b0

3884c27ed652f5c3d042eba5b19951d82897e215f1c80a42476f04a7f4f3b886

620a9c0fd74fc416e679a475b6fdabbcb21654d1f027f98de5ec5c5377d9de9f

cf85ce2fb5e61f98ac317603417f062518a270e7368ef1097363673176328406

3f02d2d975740e68ef689134708cc18201c384c52f87163ecc9e14120e1afd51

daa20671027ae772953dae4ea960b0085e6f963224f8444b2ed57991731c54d6

29ba72dc76288baafaeabb04f14815393c9878fce35c4892b3cfd1afe3c72d9a

27d3bcc522f36e27ce550bd4e08270c372d34c6687a5fc674de349b6fccb3fdb

8640e9aa19063144c23ecaf1e2f05a49e656079dc67056de249303f4064234f3

0d8bad5b2b2eabbb4c7af7ef4507bb8a9a4c32c28c1362821897ecae52fd8529

9a4d06cde57b835741ef74c556de2dd2d2ea07e45d027b4e267c1c74c51ac9fbOne of the values is the checksum of the empty file (the one beginning e3b0), and the other 12 are checksums of large documents (from the humorous computing conference SIGBOVIK). Because SHA-256 is designed to be cryptographically secure, we expect that the hex digits are essentially random. Therefore, this challenge is almost entirely about JavaScript compression techniques for random hexadecimal numbers.

Notice that one of the numbers begins with a zero. Handling a leading zero can cost quite a few bytes of code (e.g. .padStart(64,0) is 15 bytes), so it significantly affects what approaches are viable for a competitive solution!

Besides simply compressing hexadecimal, there are two other avenues to exploit that I considered:

- One of the values is the checksum of an empty file. If there was a short enough built-in JavaScript function to get a SHA-256 checksum, we could use it to produce that value. However, this didn’t end up being fruitful, as accessing SHA-256 from JavaScript seems to require a lot of code, and I doubt the Golf Horse environment would even have access to it.

- We’re allowed to produce the list in any order, as long as all 13 values are printed exactly once. It turns out this is a critical factor to produce a competitive solution! There are 13! orderings, about \(6 \cdot 10^9\), which is a decently large search space to optimize over.

Prior work

Before my submission, the #2 and #3 entries shared their implementation: boviner by Hasegawa Sayuri and bovinest by dwrensha. Both are based on the same code, differing only in the compressed data and initial value:

b=0n

for(c of`DATA`)b=b<<7n^BigInt(c.charCodeAt())

console.log(b.toString(16).replace(/6a/g,`\n`))The idea here is as follows:

- A large number is constructed 7 bits at a time from the Unicode values of a string.

- 7 bits was chosen because this range is covered by 1-byte Unicode characters.

- However, some 1-byte Unicode characters can’t occur literally (specifically: backtick, carriage return, and backslash), so they must be escaped or absorbed into the bits of an adjacent 2-byte character.

- Convert the number to a hexadecimal string.

- Split the string at the occurrences of

6a. Note that6adoes not occur anywhere in the required output. - Print the strings.

This solution requires some optimization: we want to avoid using escaped or 2-byte characters in our string. By rearranging the order of the output and selecting a good starting value, this can be entirely avoided: “bovinest” has none.

When thinking about how to approach this problem myself, I wanted to look into these potential areas of improvement:

- This program can encode most 7 bits per byte of source code, requiring at least 475 bytes to represent the 832 hex digits. However, it’s possible to get upwards of 7.14 bits per byte; see my article about packing bits. That would get us to 466 bytes of compressed data, saving 9 bytes. Could >7 bits/byte be achieved with small enough code size to be profitable? (Spoiler: yes!)

- Adding the newlines is costing quite a few bytes. The introduction of the digits

6aat each newline costs 12*8 = 96 bits, translating to 14 bytes of compressed data. Indeed, there’s a modification that doesn’t require introducing extra digits at newlines, saving a net of ~10 bytes; I’ll leave it as an exercise to the reader! - Using BigInts comes with tradeoffs: although it offers a simple way to construct a large string of hex digits, manipulating BigInts requires more code size than regular JavaScript numbers. In particular, the

nsuffix needs to be used for BigInt literals, and theBigInt()constructor is 8 bytes. It could be worthwhile to explore other approaches that produce fewer hex digits at once, but don’t need BigInts.

The first point is where most of the action is, so let’s dive into it next.

Hashing as a compression technique

We’d like to find a program that can (1) produce a number from a string (2) obtain more than 7 bits of number per byte of string. The decoders discussed in my previous article can do this, but are far too large to use in the SIGBOVIK problem, so I needed new ideas.

My key inspiration came from hash functions such as SHA-256 itself. The idea is as follows: let’s say we have a hash function from strings to 256-bit numbers. Assume it’s a high-quality hash, so that the output looks essentially random. The intuition is that a good hash function should preserve the entropy of the input, allowing us to use hashes of short, high-entropy JavaScript strings to encode 256-bit numbers.

Let’s do some concrete calculations to verify the idea. If I have 2^256 different strings, their hashes will cover most possible 256-bit outputs. (There will be collisions, so actually about \(1-1/e\) possible outputs will be covered.) If the strings are short, then we can hash the strings as a way to efficiently produce 256-bit outputs. Let’s do some math to see how long we need strings to be to get 2^256 of them.

Let’s assume that our strings each consist of the following:

- X 1-byte Unicode characters

- Y 2-byte Unicode characters

- Z 3-byte Unicode characters

(We could include 4-byte characters, but the added complexity isn’t worth it for a problem as small as SIGBOVIK. 4-byte characters require the use of codePointAt instead of charCodeAt, and need special handling for indexing if not using a for-of loop.) The length of the string in bytes is L=X+2Y+3Z. There are 128 1-byte Unicode characters, 1920 2-byte, and 61440 3-byte. However, of the 1-byte characters, we have to escape backtick, carriage return, and backslash, (and sometimes dollar sign, if it’s followed by an opening brace) so we effectively have 125 1-byte characters and 1923 2-byte characters. So, our total number of strings is:

\(\displaystyle N=125^X \cdot 1923^Y \cdot 61440^Z \cdot \binom{X+Y+Z}{Y} \binom{X+Z}{Z}\)

We want to maximize N given L. It’s a bit of work which I’ll spare you, but with some approximations and Lagrange multipliers, we find that for large L, X:Y:Z should be around 88:10:2. With these ratios, we get N=2^256 when L=36.3. Therefore, we only need strings of ~36 bytes to generate most 256-bit numbers if we have a good hash function. That puts us at 256/36.3 = 7.05 bits/byte, beating our benchmark of 7 bits/byte. Furthermore, if we allow X:Y:Z to vary, and L gets larger, it trends up to 7.14 bits/byte.

So, this validates the idea that a good hash function is all we need to efficiently compress bits. Our next steps are to find a good enough hash function, and then figure out what inputs to the hash function gets us the SIGBOVIK data as output.

Designing a hash function

Hash functions are a fundamental tool in computer programming, so there’s a huge literature around designing such functions with different tradeoffs. For our purposes, we need something that (1) hashes strings to numbers (2) has reasonably well-distributed outputs (3) has extremely tiny code size.

There are two general approaches to consider: using BigInts or not. With BigInts, we can generate an entire 256-bit (i.e., 64-hex) hash at once. Without them, we would need to concatenate multiple smaller hashes. Although I had a crazy idea for a potentially competitive BigInt-based solution, it looked very hard to write an encoder, so I’ll relegate that to an appendix and describe the non-BigInt approach.

The general design is going to look like this:

- Pick an initial value S to serve as the hash state.

- Read a character C from the string containing compressed data.

- Hash together S and C, and store to S.

- Output one or more hex digits from S.

- Repeat 2–4 until we have all our hex digits.

It’s important to balance the rate that we read in data (step 2) and the rate that we extract data (step 4). Let’s do some quick math. Using the theory of Shannon entropy, we can calculate how many bits each character is worth. Recall the ratio of 88:10:2 for 1, 2, and 3-byte characters. The entropy is:

\(\displaystyle -0.88\log_2(0.88/125) -0.1\log_2(0.1/1923) -0.02\log_2(0.02/61440)\)

Which comes out to about 8.15 bits (actually a bit less, if we use the unrounded values for the 88:10:2 ratio). Conveniently, that’s just enough to get 2 hex digits.

So, let’s design a program that outputs 2 hexes per character of input:

w=""

s=1

for(i=1;i%429;)

{

s=hash(s,DATA.charCodeAt(i))

w+=i++%33?s.toString(16).slice(-2):`\n`

}

console.log(w)(This is not fully “golfed”, but I wanted to keep it somewhat readable.) However, the .slice(-2) is costly; it’s shorter to output 1 hex at a time:

w=""

s=1

for(i=1;i%845;)

{

s=hash(s,DATA.charCodeAt(i/2))

w+=i++%65?(s&15).toString(16):`\n`

}

console.log(w)Note that .charCodeAt(i/2) will cause us to read and hash each character twice. Although it may seem strange, there’s no problem with it.

With this template, we just need to provide the function hash and the string DATA—easier said than done! Let’s focus on hash first.

When building a hash function, some of the primary operations to consider are:

- XOR, addition, or subtraction to mix two values together, but there’s little (or none, in the case of XOR) interaction between low and high bits

- Bit shift and rotation to move low bits to high bits, and vice-versa

- Multiplication by an integer constant, which does a good job mixing higher bits, but doesn’t mix low bits well

Other operations are possible but tend to have drawbacks. For example, bitwise AND will lose a lot of entropy if one value has a lot of 0 bits.

With the extremely limited code size we’re working with, I figured we only have room for a couple operations. And it’s tough to build something that mixes bits well with only two operations. However, as I was experimenting, I realized JavaScript allowed a nice trick that helps to mix low and high bits at once: numbers are always floating-point, so I can multiply by a fractional number. Choosing 9.1 as a multiplier, the multiplication by 9 helped with mixing higher bits, while the multiplication by .1 would mix high bits with low bits—exactly what we need in a good hash function. Thus, I arrived at

function hash(x,y)

{

return x*9.1^y;

}Note that in JavaScript, the bit operations truncate numbers to a 32-bit signed integer. So, we multiply by 9.1 to get a well-scrambled float, then the XOR operation brings us back to a 32-bit integer. The conversion to 32 bits is important, otherwise continually multiplying by 9.1 will cause the number to keep growing larger, losing precision and eventually overflowing.

Ultimately, it was only through empirical testing that I could confirm this hash function was sufficient. In fact, the constant of 9.1 could be replaced with many other equally effective numbers. One might even hope that we could save a byte with division instead:

function bad_hash(x,y)

{

return x/.9^y;

}However, my testing confirmed that single-digit denominators made inferior hash functions, so this can’t save a byte.

Note: My first two submissions used a hash of the form s^=9.1*s^[character code]. After writing the initial version of this article, I realized I missed a one-byte savings opportunity; the ^= could just as well be =. My third and fourth submissions made this change, so that the hash has the form s=9.1*s^[character code].

Writing the encoder

Now, we are left with the challenge of finding the DATA which will hash into the SIGBOVIK hex numbers in as few bytes as possible. Note that the DATA has a fixed length in characters, but we are trying to optimize the length in bytes: Unicode characters can be anywhere from 1 to 4 bytes each (though we won’t use 4-byte characters in our submission).

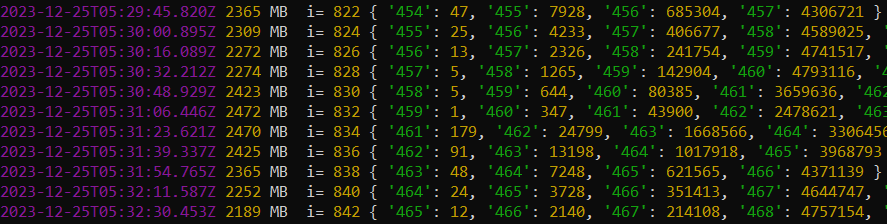

I could not find any mathematical structure that would facilitate an efficient encoder. Instead, I went for a “brute force” approach, utilizing dynamic programming to search through all possibilities for the shortest strings, one character at a time. Because of the size of the search space, however, I couldn’t perform a complete search, so I may not have found an optimum solution.

Here is an outline of my encoder:

- Our state space consists of (1) the current value of the variable

s(2) which of the 13 numbers we have completed (3) which of the 13 numbers we are currently encoding. For each state, keep a record of the string used to get there. - Initialize a cache of states consisting of valid starting states.

- For each state in the cache, and for every 1, 2, and 3-byte Unicode character, check that it will produce the next two hex digits we want. If so, then add the new state to the cache. Remove all the old states from the cache.

- After completing a 64-hex number, try each possible next number and add to the state cache. (Note that it’s quite valuable to try all possible orders: a favorable order saves a few bytes.)

- Repeat 3-4 until complete.

- Output the best solution in the cache.

Because the state space is gigantic (s is 32 bits, plus about 14 bits to store the completed and current numbers, minus 4 bits of s that were forced by the last digit we encoded), we must limit the size of the cache. We only keep the entries with the lowest number of bytes. I was able to go up to about 5 million entries while maintaining a run time of less than 4 hours on my computer.

Another possible dimension to search is the hash function’s multiplier. I only made a modest effort to search it, only looking for multipliers that got lucky early in the encoding.

I expect that this is enough to get within 1 byte of optimal, but that’s just a conjecture based on the diminishing returns I was seeing. Had I been more motivated, I believe one could get multiples more speed in a lower-level language such as C++, allowing more extensive searches.

Overall, this innocent-looking code golf challenge proved to have substantial depth. I hope I can generalize the techniques I discovered here to other golf challenges!

Appendix I: A few golf tricks

Our somewhat-un-golfed code looks like this:

w=""

s=1

for(i=1;i%845;)

{

s=s*9.1^DATA.charCodeAt(i/2)

w+=i++%65?(s&15).toString(16):`\n`

}

console.log(w)We can save some bytes by combining initializations cleverly:

- We set

wto 2. This will get cast into a string, becoming the first hex digit “2”. - We set

ito 2. Becausewalready has its first character, we can skip overi=1. - We set

sto 2. It’s the initial value of the hash, so it can be anything we want (provided the encoder knows it, too!).

Thus:

for(w=s=i=2;i%845;)

{

s=s*9.1^DATA.charCodeAt(i/2)

w+=i++%65?(s&15).toString(16):`\n`

}

console.log(w)To arrive at the code in my first three submissions, simply move the w+=... line into the for-statement, allowing removal of the braces; and replace the \n with an actual newline character.

As I continued to search for golf tricks, I eventually realized the loop termination condition could be combined with fetching the compressed data:

for(w=s=i="";c=DATA.charCodeAt(i/2);)

{

s=s*9.1^c

w+=++i%65?(s&15).toString(16):`\n`

}

console.log(w)The loop will terminate when it reads past the end of the compressed data (c will become undefined), or if there’s a 0 in the compressed data. This saves only 1 byte of code, but it also eliminates the unused first byte of the compressed data string, saving a character. On the other hand, we have to eliminate any 0’s from the compressed data, requiring us to re-encode everything. That turns out to be a favorable tradeoff, and so I used this optimization for my fourth and final submission.

Appendix II: A possible BigInt method

I had another totally unrelated method that could potentially achieve the same program length. The idea was to append together a bunch of 7-digit numbers to make a BigInt, and then print the last 64 hex digits, hoping that the last 64 hex digits is a good-enough hash of the input data. However, that is just a conjecture; it might not end up working well. But I thought it was interesting enough that I wanted to share the idea anyway:

for(w=i="429";i;i%33||console.log(BigInt(w).toString(16).slice(-64)))w+=ENCODED.charCodeAt(--i)+1e6 What I really liked about this is the abuse of JavaScript’s weak typing: the w+=... looks like numeric addition, but it’s actually string concatenation.

I estimated that the encoder for this method would be even harder than the one above; it involves some number theory and knapsack solving! However, the estimated number of bytes is the same as the above approach. So, this seemed like a harder way to achieve the same thing.

Appending III: More improvements

(Added 28-Apr-24) I made some more progress with auroch_v5 and auroch_v6, which get their own article.